[Paper Review] Skip-GANomaly

2020. 8. 12. 11:41ㆍDeepLearning/GAN

- Skip-GANomaly: Skip Connected and Adversarially Trained Encoder-Decoder Anomaly Detection, 2019.

- Authors: Samet Akçay, Amir Atapour-Abarghouei, Toby P. Breckon

- Conference: 2019 International Joint Conference on Neural Networks (IJCNN)

- Github: Link

- Summary:

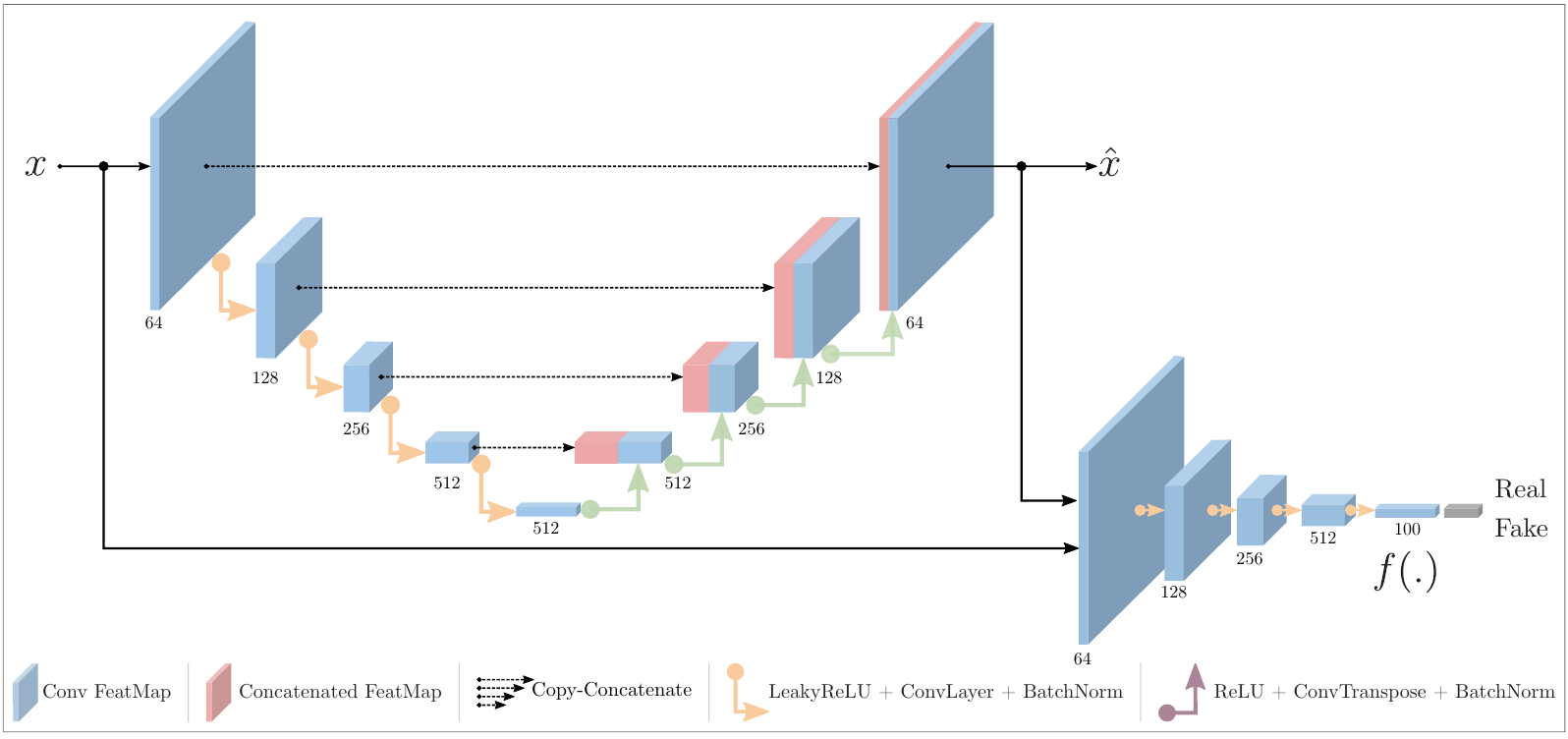

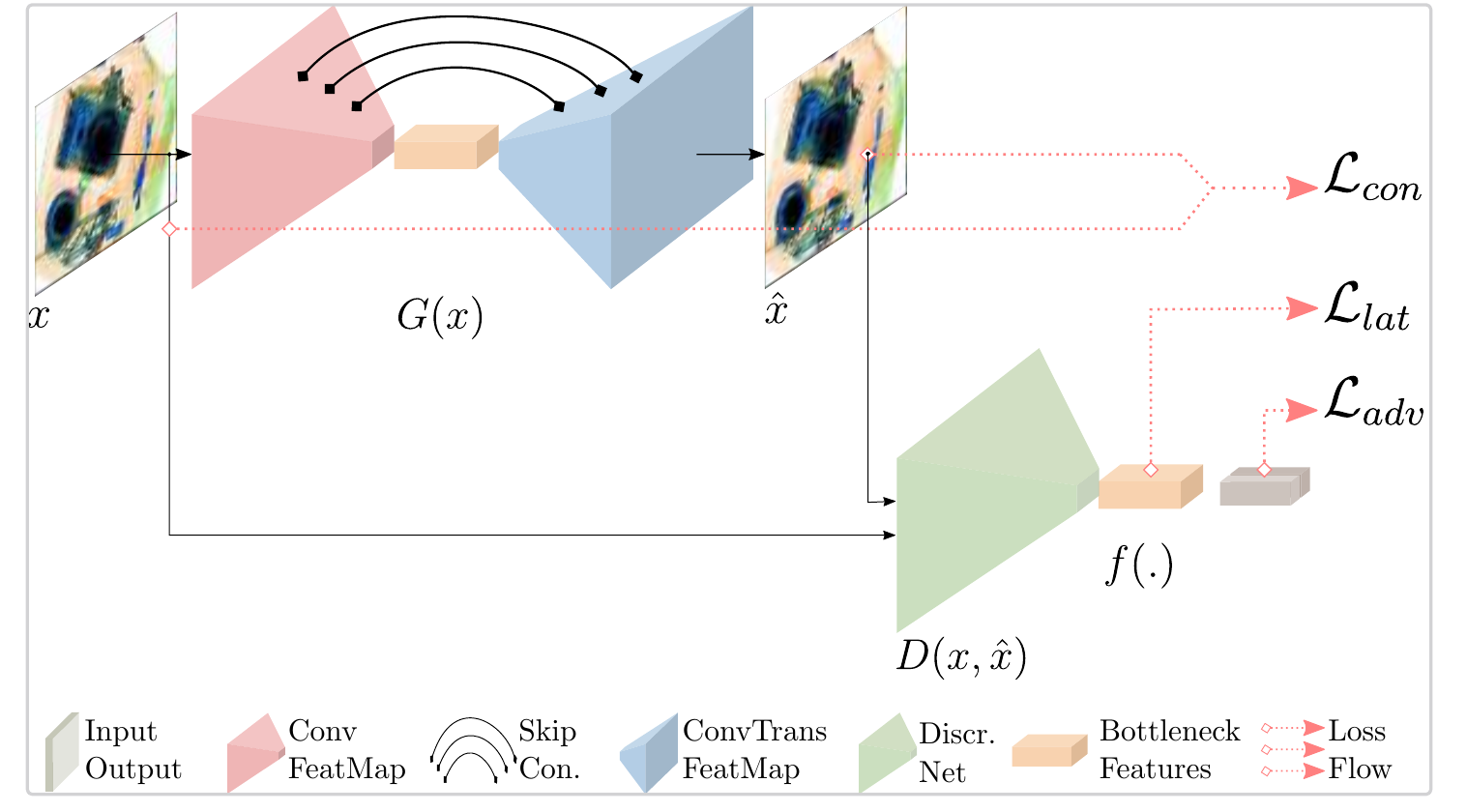

- A U-Net-like encoder-decoder convolutional neural network with skip connections, trained with an adversarial training scheme

- Role of the skip connections in the generator

- more stable training

- Adversarial Training

- AAE (Adversarial Autoencoder)

- superior reconstruction

- superior capability of controlling the latent space

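- Loss function: a weighted sum of three terms (Adversarial + Contextual + Latent)

- Adversarial loss: $\log D(x) + \log(1 - D(x'))$

- Contextual loss: $\|x - x'\|$, between the input $x$ and its reconstruction $x'$

- Latent loss (discriminator feature loss): $\|f(x) - f(x')\|$, where $f(\cdot)$ is an intermediate layer of the discriminator

- The discriminator follows the same structure as the DCGAN discriminator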

- Inference is based on the reconstruction error

- Contextual similarity: $\|x - x'\|$

- Latent representation score: $\|f(x) - f(x')\|$

- The anomaly score is feature-scaled to the probabilistic range $[0, 1]$

- Limitations

- Although the proposed model is able to detect abnormality within the latent object space, it also generates (reconstructs) abnormal samples

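The training objective and the inference-time anomaly score summarized above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes an L1 contextual loss and a mean-squared latent loss (as in GANomaly-style models), that the discriminator outputs probabilities in (0, 1), and that the two inference terms are combined as `lam * R(x) + (1 - lam) * L(x)`. The function names, the loss weights (all 1.0) and `lam=0.9` are hypothetical, illustrative choices.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite when D outputs exactly 0 or 1


def adversarial_value(d_real, d_fake):
    """log D(x) + log(1 - D(x')), averaged over the batch.

    The discriminator maximizes this value; the generator minimizes it
    (only the second term depends on the generator)."""
    d_real = np.clip(d_real, EPS, 1 - EPS)
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake)))


def generator_loss(x, x_rec, f_x, f_rec, d_real, d_fake,
                   w_adv=1.0, w_con=1.0, w_lat=1.0):
    """Weighted sum of adversarial, contextual and latent losses.

    The weights are placeholders (assumption), not tuned values."""
    l_adv = adversarial_value(d_real, d_fake)
    l_con = float(np.mean(np.abs(x - x_rec)))    # contextual: mean absolute (L1) error between x and x'
    l_lat = float(np.mean((f_x - f_rec) ** 2))   # latent: mean squared error between discriminator features
    return w_adv * l_adv + w_con * l_con + w_lat * l_lat


def anomaly_scores(x, x_rec, f_x, f_rec, lam=0.9):
    """Per-sample score lam * R(x) + (1 - lam) * L(x), min-max scaled to [0, 1].

    Axis 0 is the batch axis; lam=0.9 is an illustrative default (assumption)."""
    r = np.abs(x - x_rec).reshape(len(x), -1).mean(axis=1)       # contextual similarity R(x)
    l = ((f_x - f_rec) ** 2).reshape(len(f_x), -1).mean(axis=1)  # latent representation score L(x)
    a = lam * r + (1 - lam) * l
    span = a.max() - a.min()
    # feature scaling over the evaluated set; if all scores are equal, map them to 0
    return (a - a.min()) / span if span > 0 else np.zeros_like(a)
```

Note that the min-max scaling is computed over the whole evaluated set, so a sample's scaled score depends on the other samples it is scored with; a higher score means the sample is more likely abnormal.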

Architecture
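The U-Net-like generator with skip connections and the DCGAN-style discriminator summarized above can be sketched in PyTorch as follows. This is a minimal sketch assuming 32×32 three-channel inputs; the class names `SkipGenerator` and `Discriminator`, the three-level depth and the channel width `nf=32` are hypothetical choices, not the paper's configuration. What it shows is the skip connection itself: each decoder stage concatenates the matching encoder feature map along the channel axis (`torch.cat(..., dim=1)`), as in U-Net, and the discriminator returns both its logit and the intermediate feature map that plays the role of f(·) in the latent loss.

```python
import torch
import torch.nn as nn


def down(in_ch, out_ch):
    # Conv-BN-LeakyReLU block that halves the spatial resolution (DCGAN style)
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=4, stride=2, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.LeakyReLU(0.2, inplace=True),
    )


def up(in_ch, out_ch):
    # ConvTranspose-BN-ReLU block that doubles the spatial resolution
    return nn.Sequential(
        nn.ConvTranspose2d(in_ch, out_ch, kernel_size=4, stride=2, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
    )


class SkipGenerator(nn.Module):
    """Hypothetical encoder-decoder with U-Net-style skip connections."""

    def __init__(self, ch=3, nf=32):
        super().__init__()
        self.d1 = down(ch, nf)           # 32x32 -> 16x16
        self.d2 = down(nf, nf * 2)       # 16x16 -> 8x8
        self.d3 = down(nf * 2, nf * 4)   # 8x8 -> 4x4 (bottleneck)
        self.u3 = up(nf * 4, nf * 2)     # 4x4 -> 8x8
        self.u2 = up(nf * 4, nf)         # input: u3 output concatenated with e2
        self.u1 = nn.ConvTranspose2d(nf * 2, ch, 4, 2, 1)  # input: u2 output concatenated with e1

    def forward(self, x):
        e1 = self.d1(x)
        e2 = self.d2(e1)
        z = self.d3(e2)
        h = self.u3(z)
        h = self.u2(torch.cat([h, e2], dim=1))  # skip connection from encoder level 2
        h = self.u1(torch.cat([h, e1], dim=1))  # skip connection from encoder level 1
        return torch.tanh(h)                    # reconstruction x' in [-1, 1]


class Discriminator(nn.Module):
    """Hypothetical DCGAN-like discriminator returning (logit, features)."""

    def __init__(self, ch=3, nf=32):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(ch, nf, 4, 2, 1, bias=False),  # 32x32 -> 16x16, no BatchNorm on the first layer
            nn.LeakyReLU(0.2, inplace=True),
            down(nf, nf * 2),                        # 16x16 -> 8x8
            down(nf * 2, nf * 4),                    # 8x8 -> 4x4
        )
        self.classifier = nn.Conv2d(nf * 4, 1, 4, 1, 0)  # 4x4 -> 1x1 logit

    def forward(self, x):
        f = self.features(x)                   # f(x), used for the latent loss
        logit = self.classifier(f).view(-1)    # apply sigmoid to obtain D(x)
        return logit, f
```

Because `e1` and `e2` reach the decoder directly, the generator does not have to pass all information through the 4×4 bottleneck; this plausibly also explains why it can reconstruct abnormal inputs well, which is the limitation noted in the summary.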

Thoughts

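- It seems applicable only to problems where normal and abnormal samples are easy to separate.

- It also generates abnormal samples that were not used during training.

- Introducing skip connections into reconstruction-error-based anomaly detection has inherent limitations.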
