[Paper Review] GANomaly: Semi-Supervised Anomaly Detection via Adversarial Training

2020. 8. 10. 15:13ㆍDeepLearning/GAN

GANomaly

Semi-supervised anomaly detection via adversarial training (2018)

- Authors: Samet Akcay, Amir Atapour-Abarghouei, and Toby P. Breckon

- Conference: Asian Conference on Computer Vision (ACCV 2018), pp. 622-637

- Organization: Springer

- Summary:

- Semi-supervised anomaly detection

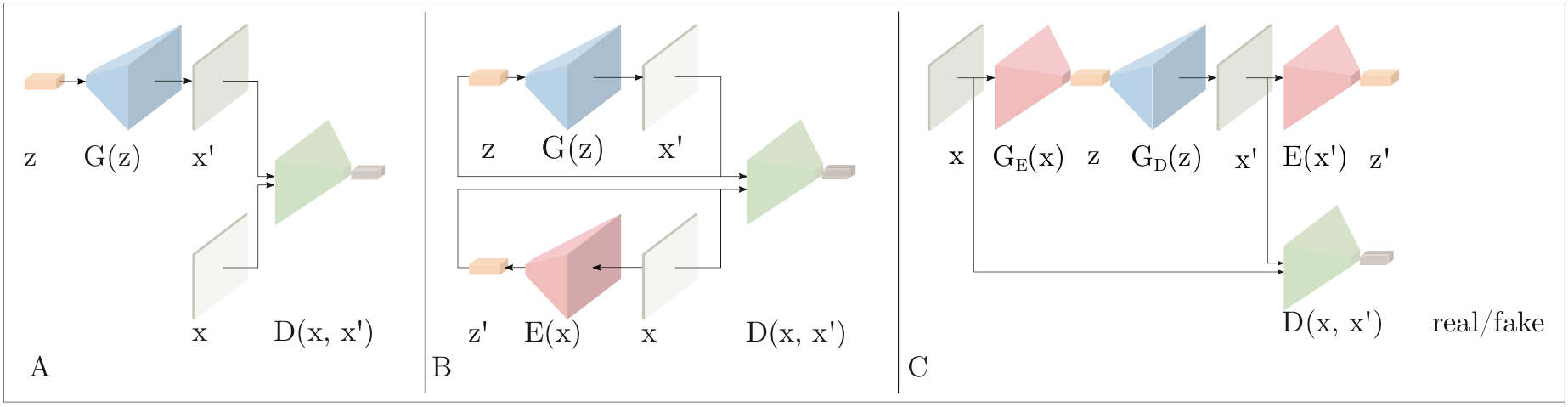

- Architecture:

- Conditional GAN

- An adversarial autoencoder within an Encoder-Decoder-Encoder pipeline

- An input is flagged as anomalous when its anomaly score exceeds a threshold, i.e. A(x) > φ.

- The official code is available: Link

Related Works

- AnoGAN

- Hypothesis: The latent vector of the GAN represents the distribution of the data

- Computationally expensive: every query image must be mapped into the GAN's latent space by iterative optimization

- BiGAN in an anomaly detection task

- Jointly learns the mapping from image space to latent space and the mapping from latent space back to image space.

- Adversarial Auto-Encoders (AAE)

- Trains autoencoders in an adversarial setting

- Better reconstruction and better control over the latent space
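AnoGAN's cost comes from solving a separate optimization problem for every query image. The toy sketch below shows that search. It rests on a few assumptions:

- A fixed linear "generator" G(z) = Wz stands in for a trained GAN.
- Only the residual term ||x - G(z)||² is used. AnoGAN also adds a discriminator-based term, which is omitted here.
- `generate`, `search_latent`, the step size and the step count are hypothetical choices made for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def generate(W: Matrix, z: Vector) -> Vector:
    """Toy linear 'generator' G(z) = W z (stand-in for a trained GAN generator)."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in W]


def residual_loss(x: Vector, x_gen: Vector) -> float:
    """Squared L2 distance between the query image and the generated image."""
    return sum((a - b) ** 2 for a, b in zip(x, x_gen))


def search_latent(W: Matrix, x: Vector, z0: Vector,
                  lr: float = 0.1, steps: int = 200) -> Tuple[Vector, float]:
    """Gradient descent on z to minimize ||x - G(z)||^2 while W stays fixed."""
    z = list(z0)
    for _ in range(steps):
        r = [g - a for g, a in zip(generate(W, z), x)]  # residual G(z) - x
        # d/dz_j sum_i r_i^2 = 2 * sum_i r_i * W[i][j]
        grad = [2.0 * sum(r[i] * W[i][j] for i in range(len(W)))
                for j in range(len(z))]
        z = [zj - lr * gj for zj, gj in zip(z, grad)]
    return z, residual_loss(x, generate(W, z))


W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
normal_x = generate(W, [0.5, -1.0])  # lies on the generator's "manifold"
odd_x = [1.0, 1.0, -5.0]             # no z reproduces this exactly
_, normal_res = search_latent(W, normal_x, [0.0, 0.0])  # close to 0
_, odd_res = search_latent(W, odd_x, [0.0, 0.0])        # about 16.33 (= 49/3)
```

Each test sample costs 200 generator evaluations plus their gradients here. A deep generator and a full image batch make this per-sample loop slow. That cost is what the encoder-based approaches above try to avoid.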

Architecture

Training

Hypothesis

- When an abnormal image is forward-passed into the network G, G_E still manages to map the input x to a latent vector z, but G_D is not able to reconstruct the abnormalities.

Loss Functions

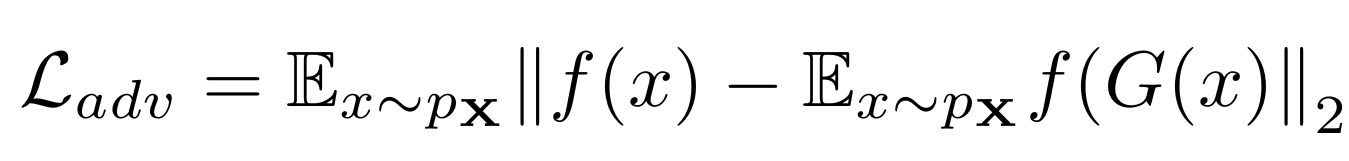

- Adversarial Loss

- Feature matching, as proposed by Salimans et al.

- Reduces the instability of GAN training

- f is an intermediate layer of the discriminator D

- L2 distance between the feature representations of the original and generated images

- Contextual Loss

- Uses an L1 loss (Isola et al. reported that L1 yields less blurry results than L2)

- Encoder Loss

- The L2 distance between the bottleneck features of the input, G_E(x), and the encoded features of the generated image, E(G(x))

- For anomalous images, the model fails to minimize the distance between the input and the generated image in the feature space, since both G and E are optimized towards normal samples only.

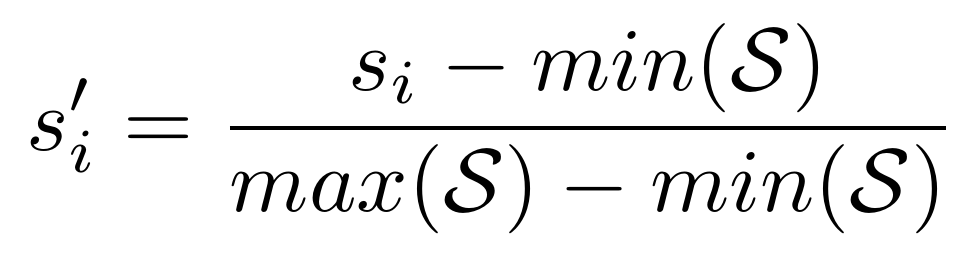
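The three losses combine into the generator objective L = w_adv·L_adv + w_con·L_con + w_enc·L_enc. The minimal pure-Python sketch below computes it under several assumptions:

- Flat lists stand in for image tensors, discriminator features and latent vectors.
- The two L2 terms are computed as mean squared errors and the L1 term as a mean absolute error.
- The default weights (1, 50, 1) are illustrative values, not numbers taken from this review.
- The helper names are hypothetical.

```python
from typing import Dict, Sequence


def mean_abs_error(a: Sequence[float], b: Sequence[float]) -> float:
    """L1-style term: mean absolute difference."""
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def mean_sq_error(a: Sequence[float], b: Sequence[float]) -> float:
    """L2-style term: mean squared difference."""
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def generator_loss(x, x_hat, z, z_hat, feat_x, feat_x_hat,
                   w_adv: float = 1.0, w_con: float = 50.0,
                   w_enc: float = 1.0) -> Dict[str, float]:
    """Weighted GANomaly-style generator objective.

    x, x_hat           : input image and its reconstruction G(x)
    z, z_hat           : bottleneck G_E(x) and re-encoding E(G(x))
    feat_x, feat_x_hat : discriminator intermediate features f(x), f(G(x))
    """
    l_adv = mean_sq_error(feat_x, feat_x_hat)  # feature matching in D
    l_con = mean_abs_error(x, x_hat)           # contextual (reconstruction) loss
    l_enc = mean_sq_error(z, z_hat)            # latent consistency loss
    total = w_adv * l_adv + w_con * l_con + w_enc * l_enc
    return {"adv": l_adv, "con": l_con, "enc": l_enc, "total": total}


losses = generator_loss(
    x=[0.0, 1.0], x_hat=[0.5, 1.0],
    z=[1.0, 2.0], z_hat=[1.0, 0.0],
    feat_x=[1.0], feat_x_hat=[0.0],
)
# adv = 1.0, con = 0.25, enc = 2.0, total = 1.0 + 50 * 0.25 + 2.0 = 15.5
```

With these weights the contextual term dominates the total even when its raw value is the smallest of the three. A practical implementation would compute the same terms on batched tensors in a deep-learning framework.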

Model Testing

- Anomaly Score

- Feature scaling maps the anomaly scores into the probabilistic range [0, 1]
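The test-time scoring step can be sketched in pure Python under two assumptions:

- A(x) is the L1 distance between the latent codes G_E(x) and E(G(x)).
- "Feature scaling" means min-max normalization over the set S of test scores.

The threshold φ = 0.5 and the helper names are hypothetical.

```python
from typing import List, Sequence


def anomaly_score(z: Sequence[float], z_hat: Sequence[float]) -> float:
    """A(x) = ||G_E(x) - E(G(x))||_1, the L1 distance between the latent codes."""
    return sum(abs(a - b) for a, b in zip(z, z_hat))


def min_max_scale(scores: Sequence[float]) -> List[float]:
    """s' = (s - min(S)) / (max(S) - min(S)), mapping scores into [0, 1]."""
    lo, hi = min(scores), max(scores)
    if hi == lo:  # all scores equal: avoid division by zero
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def flag_anomalies(scaled: Sequence[float], phi: float) -> List[bool]:
    """An input is flagged as anomalous when its scaled score exceeds phi."""
    return [s > phi for s in scaled]


latents = [([0.0, 0.0], [0.0, 0.0]),
           ([1.0, 0.0], [0.0, 0.0]),
           ([2.0, 2.0], [0.0, 0.0])]
raw = [anomaly_score(z, z_hat) for z, z_hat in latents]  # [0.0, 1.0, 4.0]
scaled = min_max_scale(raw)                               # [0.0, 0.25, 1.0]
flags = flag_anomalies(scaled, phi=0.5)                   # [False, False, True]
```

Min-max scaling makes each sample's scaled score depend on the other scores in S. A threshold φ chosen on one test set therefore does not transfer directly to another.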

Thoughts

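- Proposes an Encoder-Decoder-Encoder architecture.

- My impression is that, within the encoder-decoder structure, computing the feature distance between the input x and the reconstruction x' would be more appropriate.

- In anomaly detection, where the goal is to find samples x with low P(x), looking at the probability distribution P(z) in the latent space does not help much.

- This is because the model is trained on normal data only, so abnormal samples will also be represented using only normal features.

- In the "unimodal normality case", P(x) can be approached through P(z).

- f-AnoGAN reports that the AdvAE architecture generates the abnormal regions as well.

- This raises doubts about whether the architecture is suitable.

- The follow-up work, Skip-GANomaly, uses an architecture with skip connections (U-Net based).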

- I'm curious what the reconstructed images look like.
