Deep Convolutional GAN (DCGAN)

2021. 4. 4. 18:49

Summary:

- Use convolutions without any pooling layers

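- Use batchnorm in both the generator and the discriminator, except for the last layer of each (the original paper instead skips it on the generator's output layer and on the discriminator's input layer)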

- Don't use fully connected hidden layers

- Use ReLU activation in the generator for all layers except for the output, which uses a Tanh activation.

- Use LeakyReLU activation in the discriminator for all layers except for the output, which does not use an activation

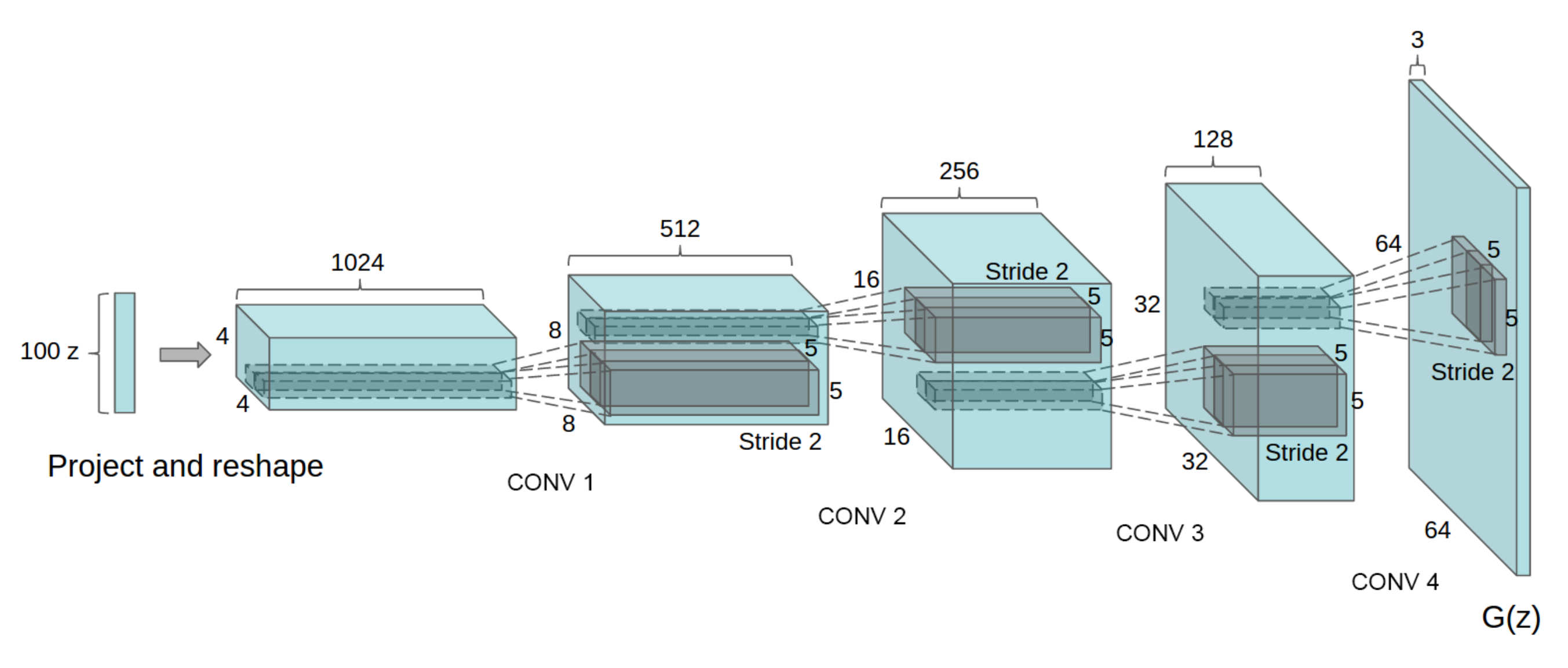

Generator

- if last layer == False: Transposed Conv + BatchNorm + ReLU

- if last layer == True: Transposed Conv + Tanh
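The generator rule above can be checked without PyTorch by tracing layer names and spatial sizes block by block. This is a minimal pure-Python sketch: `gen_block_layers` and `trace_generator` are hypothetical helpers, the (kernel_size, stride) list is an assumed MNIST-sized configuration (1x1 noise grown to 28x28), and `conv_transpose_out` is the output-size formula given in the PyTorch `ConvTranspose2d` documentation.

```python
# Pure-Python sketch: no PyTorch needed, only the ConvTranspose2d output-size formula.

def gen_block_layers(final_layer):
    """Hypothetical helper: layer names of one generator block, following the rule above."""
    if final_layer:
        return ["ConvTranspose2d", "Tanh"]  # output layer: no BatchNorm, Tanh maps to [-1, 1]
    return ["ConvTranspose2d", "BatchNorm2d", "ReLU"]


def conv_transpose_out(size, kernel_size, stride, padding=0, output_padding=0, dilation=1):
    """Spatial output size of ConvTranspose2d (formula from the PyTorch docs)."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1


def trace_generator(blocks, start_size=1):
    """Return (spatial size, layer names) after each (kernel_size, stride) block."""
    size, trace = start_size, []
    for idx, (kernel_size, stride) in enumerate(blocks):
        size = conv_transpose_out(size, kernel_size, stride)
        trace.append((size, gen_block_layers(final_layer=idx == len(blocks) - 1)))
    return trace


# Assumed configuration: the noise vector is viewed as a 1x1 "image" with many channels.
for size, layers in trace_generator([(3, 2), (4, 1), (3, 2), (4, 2)]):
    print(f"{size}x{size}", layers)
```

With this configuration the sizes go 3x3, 6x6, 13x13, 28x28, and only the last block uses Tanh, which matches training images normalized to [-1, 1].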

Discriminator

- if last layer == False: Conv2d + BatchNorm + LeakyReLU

- if last layer == True: Conv2d
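The same kind of trace works for the discriminator, where stride-2 convolutions do the downsampling instead of pooling layers. Again a pure-Python sketch under assumptions: `disc_block_layers` and `trace_discriminator` are hypothetical helpers, the three (kernel 4, stride 2) blocks on a 28x28 input are an assumed configuration, and `conv_out` is the `Conv2d` output-size formula from the PyTorch documentation.

```python
def disc_block_layers(final_layer):
    """Hypothetical helper: layer names of one discriminator block, following the rule above."""
    if final_layer:
        return ["Conv2d"]  # no BatchNorm and no activation: the block emits a raw logit
    return ["Conv2d", "BatchNorm2d", "LeakyReLU"]


def conv_out(size, kernel_size, stride, padding=0, dilation=1):
    """Spatial output size of Conv2d (formula from the PyTorch docs)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def trace_discriminator(blocks, start_size=28):
    """Return (spatial size, layer names) after each (kernel_size, stride) block."""
    size, trace = start_size, []
    for idx, (kernel_size, stride) in enumerate(blocks):
        size = conv_out(size, kernel_size, stride)
        trace.append((size, disc_block_layers(final_layer=idx == len(blocks) - 1)))
    return trace


# Assumed configuration: three kernel-4, stride-2 blocks on a 28x28 image.
for size, layers in trace_discriminator([(4, 2)] * 3):
    print(f"{size}x{size}", layers)
```

The sizes shrink 28 → 13 → 5 → 1, so the last block outputs one raw score per image; in PyTorch such a logit is usually paired with a loss like `torch.nn.BCEWithLogitsLoss`, which applies the sigmoid internally.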

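Question: In the DCGAN example code, the models are never switched between train() and eval() while the generator and the discriminator are trained in alternation. The reason is batch normalization: in eval() mode it normalizes with its running mean and variance instead of the current mini-batch statistics, and those running estimates only become stable after many mini-batches. Toggling between train() and eval() during training would therefore make training even more unstable. discuss.pytorch.org/t/why-dont-we-put-models-in-train-or-eval-modes-in-dcgan-example/7422/2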
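To see why the running statistics are unreliable early on, here is a small pure-Python simulation of a BatchNorm running mean. It assumes PyTorch's update rule `running = (1 - momentum) * running + momentum * batch_mean` with the default momentum 0.1 and an initial running mean of 0; the Gaussian data with mean 5.0 and the helper `simulate_running_mean` are hypothetical. It only models the mean (the real layer also tracks a running variance) and ignores that, in a GAN, the distribution of fake images keeps shifting as the generator learns.

```python
import random
import statistics


def simulate_running_mean(num_batches, true_mean=5.0, batch_size=64, momentum=0.1, seed=0):
    """Track a BatchNorm-style running mean over mini-batches drawn from N(true_mean, 1)."""
    rng = random.Random(seed)
    running_mean = 0.0  # PyTorch initializes running_mean to zeros
    history = []
    for _ in range(num_batches):
        batch = [rng.gauss(true_mean, 1.0) for _ in range(batch_size)]
        batch_mean = statistics.mean(batch)  # train() mode normalizes with this
        # eval() mode would normalize with this lagging estimate instead
        running_mean = (1 - momentum) * running_mean + momentum * batch_mean
        history.append(running_mean)
    return history


history = simulate_running_mean(100)
for step in (1, 10, 100):
    print(f"after {step:3d} batches: running mean = {history[step - 1]:.2f}")
```

After one batch the running mean is only about 10% of the true value, and it takes dozens of batches to catch up, so an eval()-mode forward pass early in training would normalize with badly wrong statistics.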

Transposed Convolution

- Transposed convolution learns a filter to up-sample.

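- Problem: the outputs can show checkerboard artifacts, because when the kernel size is not divisible by the stride, neighboring output pixels receive an uneven number of kernel contributions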

- distill.pub/2016/deconv-checkerboard/

- Current trend: upsampling (e.g., nearest-neighbor resizing) followed by a regular convolution, which avoids the checkerboard artifacts.
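The overlap problem and the upsampling + convolution alternative can both be shown with a 1D toy in pure Python. This is a sketch under simplifying assumptions: a constant input, all-ones kernels, no padding, and no bias (the helper names are hypothetical). An ideal 2x upsampler should turn a constant input into a constant output.

```python
def conv_transpose1d(x, w, stride):
    """Naive 1D transposed convolution (no padding, no bias)."""
    out = [0.0] * ((len(x) - 1) * stride + len(w))
    for i, xi in enumerate(x):
        for j, wj in enumerate(w):
            out[i * stride + j] += xi * wj  # input i "stamps" the kernel at offset i * stride
    return out


def upsample_nearest1d(x, scale):
    """Nearest-neighbor upsampling: repeat each sample `scale` times."""
    return [xi for xi in x for _ in range(scale)]


def conv1d(x, w):
    """Naive 1D 'valid' convolution (cross-correlation, stride 1, no padding, no bias)."""
    k = len(w)
    return [sum(x[o + j] * w[j] for j in range(k)) for o in range(len(x) - k + 1)]


x = [1.0] * 4  # constant input
print(conv_transpose1d(x, [1.0] * 3, stride=2))  # kernel 3, stride 2: uneven overlap
# [1.0, 1.0, 2.0, 1.0, 2.0, 1.0, 2.0, 1.0, 1.0]
print(conv_transpose1d(x, [1.0] * 4, stride=2))  # kernel 4, stride 2: even overlap inside
# [1.0, 1.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0, 1.0, 1.0]
print(conv1d(upsample_nearest1d(x, 2), [1.0] * 3))  # upsampling + convolution
# [3.0, 3.0, 3.0, 3.0, 3.0, 3.0]
```

The alternating 1/2 values in the first output are the 1D version of the checkerboard (in 2D it happens along both axes); the 1.0 values at the edges come from the missing padding, not from the checkerboard effect. Even overlap only removes the built-in unevenness, since learned weights can still introduce artifacts, while upsampling + convolution gives every output the same number of contributions by construction.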